[Image: Are you ready to harness the full power of the event system?]

Go back to the post I made about NEURO and the structure of NullpoMino. I noted how NEURO was going to be the center of the design. Right now, the implementation looks exactly like that diagram (except we haven't implemented the network yet, so that part isn't available). However, this isn't the limit of what we can do with NEURO. It's not even close.

I mentioned that NEURO was the central hub for all event passing. Its purpose is to pass around events... but even then, it still receives some. When you enter inputs through your keyboard, mouse, or joystick, there are events created by the input systems which NEURO is actually a listener for. This makes NEURO a pawn in its own event-subscriber network (albeit a very boring one because it only has one subscriber). I designed NEURO to be a star network on purpose, because I felt that it would be too much to add things like irssi-style blocking and ordering into the mix. This design is simple and powerful. I hadn't realized at the time how powerful it really is. The key facet is that NEURO is already an event subscriber... nothing stops it from listening for NEURO events!

The implication is that a NEURO instance can also be a plugin to a larger NEURO network!
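To make that concrete, here is a minimal sketch of the idea in Python. None of these names come from NullpoMino: `Hub`, `Plugin`, `subscribe`, `dispatch`, and `receive` are hypothetical stand-ins, and the real NEURO API may look quite different. The only point is that a hub which exposes the same `receive` method as a plugin can be subscribed to another hub.

```python
class Plugin:
    """Hypothetical listener: anything with a receive() method."""

    def __init__(self, name):
        self.name = name
        self.seen = []

    def receive(self, event):
        self.seen.append(event)


class Hub:
    """Hypothetical stand-in for a NEURO: a star-shaped event dispatcher."""

    def __init__(self, name):
        self.name = name
        self.listeners = []

    def subscribe(self, listener):
        self.listeners.append(listener)

    def dispatch(self, event):
        # Star network: every listener gets every event.
        for listener in self.listeners:
            listener.receive(event)

    def receive(self, event):
        # A hub is also a listener, so it can be plugged into another hub.
        self.dispatch(event)


main = Hub("main")
sub = Hub("sub")
game = Plugin("game")
helper = Plugin("helper")

main.subscribe(game)
main.subscribe(sub)    # the sub-hub is just another plugin of main
sub.subscribe(helper)

main.dispatch("key_down")
```

After the last line, both `game` and `helper` have seen `"key_down"`, even though `helper` was never registered with `main` directly.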

This doesn't seem very useful on the surface, but it is actually extremely powerful for such a simple design. There is now no limit to the complexity you can create! If you so desire, you can create and release plugins that are entire networks of their own. If you had a couple hundred petabytes of memory, you could potentially simulate the entire human brain!

"Now this sounds all well and good, but what are some applications of this?" This is a harder question to answer, but there are a few things I can think of offhand that would make use of this sub-NEURO paradigm. First, I am going to build a network right in front of your eyes. Don't blink, you might miss something important! This might be a little difficult to follow, so I'm going to have to give some backstory as well.

Part I: Return of the Randomizer Analyzer

"Return of the... I never realized it existed to begin with!"

Well, it did, as a project on my hard drive. I'm pretty interested in randomizer theory, and I've done quite a bit of work in figuring out the theoretical and experimental implications of certain randomizers. Sadly, most of the stuff I'd published has been lost to the sands of the Internet. It may come back someday, but don't get your hopes up.

My first run-in with this sort of stuff was back during NullpoMino 6.3. In an Unofficial Expansion, I had written a sequencer that would spit out the sequence given by any randomizer for any number of pieces, as a convenience to TASers who wanted to do some planning past the number of next pieces they had with their rule.

[Image: Not the original, but close enough.]

I thought this was pretty cool, and that I could use it to experimentally determine the properties of randomizers. So, I upgraded it with some neat analysis functionality. It counted the number of each piece, the number of each digraph (sequence of two pieces), the number of intervals between pieces of the same type, and the cumulative interval curve.
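As a rough illustration of those four statistics, here is a small Python sketch. It is not the original analyzer: the function name `analyze` is made up, and I am assuming that the "cumulative interval curve" means the fraction of same-piece intervals that are at most a given length.

```python
from collections import Counter


def analyze(pieces):
    """Single pass over a piece sequence, collecting four statistics."""
    piece_counts = Counter()     # how often each piece appears
    digraph_counts = Counter()   # how often each ordered pair appears
    interval_counts = Counter()  # gap lengths between repeats of a piece
    last_seen = {}               # piece -> index of its last appearance
    previous = None

    for index, piece in enumerate(pieces):
        piece_counts[piece] += 1
        if previous is not None:
            digraph_counts[(previous, piece)] += 1
        if piece in last_seen:
            interval_counts[index - last_seen[piece]] += 1
        last_seen[piece] = index
        previous = piece

    # Assumed definition: fraction of intervals no longer than each length.
    total = sum(interval_counts.values())
    cumulative = {}
    running = 0
    for length in sorted(interval_counts):
        running += interval_counts[length]
        cumulative[length] = running / total

    return piece_counts, digraph_counts, interval_counts, cumulative


counts, digraphs, intervals, curve = analyze("IIOTI")
```

For the toy sequence `"IIOTI"`, the I piece repeats after 1 piece and then after 3, so `intervals` holds one interval of each length and `curve` reaches 1.0 at length 3.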

By running extremely long sequences through the analyzer, I could get a look at how a randomizer acted without doing any math or playing a single game. It was a fun tool for testing new ideas. However, it was a piece of crap. NullpoMino's randomizers were built to generate an entire sequence at once, and they generally generated a sequence of 1400 pieces. My standard for a "long sequence" was 63 million pieces. Generating all of that at once meant using a lot of memory. That was not acceptable.

I didn't really come back to this until much later, when I wrote my own sequence analyzer that was completely separate from NullpoMino. I designed this one better, and it wasn't a piece of crap. It also only generated as much as it needed and cached a lot of things, so it was fairly fast (much faster than the old one, especially on long sequences). The randomizer framework and almost all of the randomizers I'd written for this were eventually ported into NullpoMino, and that's why there are so many random randomizers written into the game.

I was able to do some things I wasn't able to do in Nullpo, like make randomizers that were parametric, so users could fool around with whatever they wanted. Also, running sequences of 63 million was pretty cheap now, since I was really never storing more than 20 pieces in memory.
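The "generate only as much as you need" idea maps nicely onto a lazy generator. The sketch below is an assumption-laden toy, not my actual framework: `bag_randomizer` and its `copies` parameter are hypothetical, and the bag randomizer is just a convenient, well-known example of a parametric randomizer. The consumer pulls pieces one at a time, so only the current bag is ever held in memory.

```python
import random
from collections import Counter
from itertools import islice

PIECES = "IJLOSTZ"


def bag_randomizer(copies=1, seed=0):
    """Endless piece stream; only one shuffled bag exists at a time."""
    rng = random.Random(seed)
    while True:
        bag = list(PIECES * copies)  # 'copies' is the tunable parameter
        rng.shuffle(bag)
        yield from bag


# Count 70,000 pieces without ever storing the sequence itself.
counts = Counter(islice(bag_randomizer(copies=1, seed=42), 70_000))
```

Because 70,000 is a whole number of 7-piece bags, every piece ends up with a count of exactly 10,000, whatever the seed.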

[Image: 3 hours and 20 minutes of analysis.]

Tier 0 network: Just a plugin

I could pretty much just plug my current analyzer into NEURO and do whatever with it. It wouldn't be very difficult, and it would work. I'd probably tweak it to use the built-in Nullpo randomizers, which are still based on my framework, with perhaps the parametric randomizers somewhere else as a bonus. The network would look like this:

[Image: As basic as can be.]

Tier 1 network: Threads, threads, threads

If I could improve on my last analyzer, surely it would be in the area of speed. How might I do this? Easily, by multithreading: most of your computers (and even some of your phones!) have multi-core processors. The task pretty transparently splits into two parts: generation and analysis. I can have a generator thread which spits out pieces to the analyzer thread, which makes sense of them. These threads must communicate in some way in order to pass information back and forth, since it's not all in the same place anymore. The structure of NEURO allows a very simple solution: make them plugins!

But it's not quite that simple; it would be strange to have two separate plugins to do one task. That's not very self-contained and it is messy. It also puts a lot of noise through the main NEURO, which might not be desirable as it could slow down the processing of other events (such as input events) by blocking the dispatch function. There's also no central authority to coordinate the actions of the two plugins. But, we can make it work. How? We have a central plugin which links both of them together... and that plugin is also a small, self-contained NEURO!

[Image: Now we're talking.]
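Here is a sketch of what the Tier 1 shape might look like in Python, under several assumptions. `AnalyzerPlugin` is a made-up name, a bounded `queue.Queue` plays the role of the small internal NEURO that links the two halves, and a memoryless randomizer stands in for whatever is being analyzed. The main network only ever sees the one plugin; the generator-to-analyzer traffic stays inside it.

```python
import queue
import random
import threading
from collections import Counter

DONE = object()  # sentinel telling the analyzer that generation is over


class AnalyzerPlugin:
    """Hypothetical plugin that privately owns a generator/analyzer pair."""

    def __init__(self, length, seed=0):
        self.length = length
        self.seed = seed
        # Bounded channel: the generator waits if the analyzer falls behind.
        self.channel = queue.Queue(maxsize=1024)
        self.counts = Counter()

    def _generate(self):
        rng = random.Random(self.seed)
        for _ in range(self.length):
            self.channel.put(rng.choice("IJLOSTZ"))
        self.channel.put(DONE)

    def _analyze(self):
        while True:
            piece = self.channel.get()
            if piece is DONE:
                break
            self.counts[piece] += 1

    def run(self):
        workers = [
            threading.Thread(target=self._generate),
            threading.Thread(target=self._analyze),
        ]
        for worker in workers:
            worker.start()
        for worker in workers:
            worker.join()
        return self.counts


result = AnalyzerPlugin(length=10_000).run()
```

One caveat about the sketch itself: in standard CPython the global interpreter lock keeps these two threads from running Python code on two cores at once, so this shows the structure rather than the speedup.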

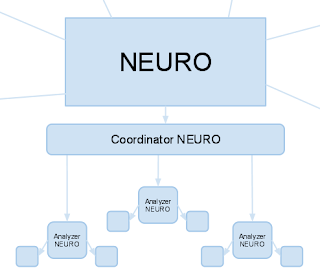

Tier 2 network: Expanding your mind in parallel

We can still do better than that. Suppose we wanted to compare a number of different randomizers. I've had to do this sort of thing myself, running a bunch of different simulations and then putting all their data in a nice big Excel sheet and graphing them all. There's a better, more automatic way, though. The basic idea is that you have a number of these autonomous analyzer NEUROs working separately on their own things, while the main plugin coordinates all of them and has the ability to synthesize all the data together. Instead of running a number of Tier 1 plugins and still needing to do some extra work to compare them, we can hide that behind another layer of indirection.

The user can control all of the analyzers from one place, and the main plugin doesn't even need to have any analyzers on hand when it is idling, either. The network will, in a sense, generate its own branches as necessary. The Tier 1 network was fixed, always having two leaves. But in this network, you can run as many simultaneous simulations as you want, and they'll all report back to the main plugin when they are finished. So, we have this:

[Image: Truly, this is what systems were meant to be.]
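A possible shape for the Tier 2 coordinator, again as a hedged sketch: `Coordinator`, `compare`, and `run_analysis` are hypothetical names, the two randomizers are simple stand-ins, and a thread pool substitutes for spawning real sub-NEUROs. The coordinator holds no analyzers while idle, creates one job per randomizer on request, and gathers the reports as they come back.

```python
import random
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

PIECES = "IJLOSTZ"


def memoryless(rng):
    """Every piece is an independent uniform pick."""
    while True:
        yield rng.choice(PIECES)


def seven_bag(rng):
    """Shuffle one of each piece, deal them out, repeat."""
    while True:
        bag = list(PIECES)
        rng.shuffle(bag)
        yield from bag


def run_analysis(name, make_sequence, length, seed):
    """One self-contained analyzer job; reports back its piece counts."""
    sequence = make_sequence(random.Random(seed))
    counts = Counter(next(sequence) for _ in range(length))
    return name, counts


class Coordinator:
    """Hypothetical main plugin: spawns analyzers on demand, merges reports."""

    def __init__(self):
        self.reports = {}

    def compare(self, randomizers, length, seed=0):
        with ThreadPoolExecutor() as pool:
            futures = [
                pool.submit(run_analysis, name, make, length, seed)
                for name, make in randomizers.items()
            ]
            for future in futures:
                name, counts = future.result()
                self.reports[name] = counts
        return self.reports


reports = Coordinator().compare(
    {"memoryless": memoryless, "7-bag": seven_bag}, length=7_000
)
```

The 7-bag report comes back perfectly flat (1,000 of each piece), while the memoryless one wobbles around that value, which is exactly the kind of side-by-side comparison I used to assemble by hand in Excel.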

Part II: Generative networks: MapReduce-poMino

"Well all that is starting to sound awfully familiar..."

You're right. It's pretty much a special case of MapReduce, a framework for massively parallel processing developed at Google. Basically, we're doing scientific computing in our video games. What now, Jack Thompson?

In the case above, the complexity is pretty hard-limited. But, someone may find a case where the network will recursively generate more sub-networks. MapReduce solves problems by chunking them into smaller and smaller bits until they can be easily solved, and then collects the answers as they are returned back up the tree that was created. NEURO could potentially facilitate the same thing. This leads to networks that can theoretically be infinite in complexity... That's an awful lot to think about.

[Image: It's like Inception inside Inception inside Inception inside...]
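The recursive chunk-and-collect pattern can be sketched in a few lines of Python. This is not Google's MapReduce API, just a toy in its spirit; `count_pieces` and `threshold` are made-up names, and each recursive call stands in for a sub-network that a NEURO might spawn.

```python
from collections import Counter


def count_pieces(sequence, threshold=4):
    """Split the work until it is trivial, then merge results on the way up."""
    if len(sequence) <= threshold:
        return Counter(sequence)  # small enough to solve directly
    middle = len(sequence) // 2
    left = count_pieces(sequence[:middle], threshold)
    right = count_pieces(sequence[middle:], threshold)
    return left + right           # combine the two sub-answers


total = count_pieces("IJLOSTZ" * 3)
```

Piece counts are a convenient example because they merge by simple addition. Statistics that span a chunk boundary, such as digraphs, would need extra care when the pieces are stitched back together.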

Part III: Move it Like irssi

I said before that I didn't want to have something like irssi's system because it's too complicated and doesn't offer that much benefit. Fortunately, I was wrong and I implemented it by accident (kind of, the actual implementation is pending).

irssi's plugin system has ordering and blocking. Ordering means that some plugins are guaranteed to receive events before other plugins, or some will get them last. Blocking means that plugins that get events first can stop the events from being sent to plugins that would get them later. irssi's system is very powerful as a result. I felt that putting this all in one place added a bunch of needless complexity, but there's actually a very simple way to do it.

irssi's standard ordering procedure places things in three groups, termed first, normal, and last. Obviously, the "first" group gets events before the "normal" group, and so on. I thought adding this sort of functionality ad hoc was not really the best way to do it, and I couldn't see any reason why it would be powerful enough to justify implementing over the simpler star network design. Then, I realized that this design is very simple to build out of sub-NEUROs, even without touching NEURO internals.

[Image: Color-coding not included.]

Blocking would take some more bookkeeping and/or a NEURO extension, but it would still not be very difficult to implement.
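Here is one way the ordering and blocking could be sketched in Python. Everything here is hypothetical: `Hub` and `Recorder` are invented names, I am assuming a hub delivers to its listeners in subscription order, and blocking is modelled as `receive` returning `True`, which is the kind of small extension mentioned above rather than anything NEURO does today.

```python
class Hub:
    """Hypothetical hub that delivers in subscription order."""

    def __init__(self):
        self.listeners = []

    def subscribe(self, listener):
        self.listeners.append(listener)

    def receive(self, event):
        # Assumed extension: a listener returning True blocks the event.
        for listener in self.listeners:
            if listener.receive(event):
                return True
        return False


class Recorder:
    """Toy plugin that logs what it sees and blocks selected events."""

    def __init__(self, name, log, blocks=()):
        self.name = name
        self.log = log
        self.blocks = set(blocks)

    def receive(self, event):
        self.log.append((self.name, event))
        return event in self.blocks


log = []
main, first, normal, last = Hub(), Hub(), Hub(), Hub()
for group in (first, normal, last):  # group order is fixed once, here
    main.subscribe(group)

# Plugins can register in any order; their group decides when they run.
last.subscribe(Recorder("logger", log))
normal.subscribe(Recorder("game", log))
first.subscribe(Recorder("filter", log, blocks={"spam"}))

main.receive("move")
main.receive("spam")
```

The `"move"` event reaches filter, game, and logger in that order, while `"spam"` stops at the filter and never reaches the other two groups.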

Part IV: Fun structures

This is more me going crazy-silly than anything else, but I imagine there are some other complicated things you can do with all this power. For example, everything we've explored so far has really only looked at the event-subscriber pattern in one direction. But, there's nothing stopping it from being bidirectional, or even cyclic across a number of NEUROs! I don't know of any particular use for this paradigm, but one might crop up someday. Someone may find it beneficial to have two halves of a plugin be essentially separate, but which can alert each other as necessary to whatever is going on and send events to dispatch to the other half's plugins. I don't even know what the uses of a cyclic process are, but they might exist somewhere.
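If you do wire two hubs to each other, the first practical problem is that an event would bounce between them forever. The sketch below shows one possible guard, with all names (`Event`, `Hub`, `seen`, `delivered`) being hypothetical: each event carries an id, and a hub ignores any id it has already handled.

```python
import itertools


class Event:
    """Toy event carrying a unique id so hubs can recognise repeats."""

    _ids = itertools.count()

    def __init__(self, name):
        self.name = name
        self.id = next(Event._ids)


class Hub:
    """Hypothetical hub that drops events it has already handled."""

    def __init__(self, name):
        self.name = name
        self.listeners = []
        self.seen = set()
        self.delivered = []

    def subscribe(self, listener):
        self.listeners.append(listener)

    def receive(self, event):
        if event.id in self.seen:
            return  # already handled once: this breaks the cycle
        self.seen.add(event.id)
        self.delivered.append(event.name)
        for listener in self.listeners:
            listener.receive(event)


left, right = Hub("left"), Hub("right")
left.subscribe(right)   # left forwards to right...
right.subscribe(left)   # ...and right forwards back to left

left.receive(Event("ping"))
right.receive(Event("pong"))
```

Each hub ends up handling each event exactly once, no matter which side it entered from. The `seen` set grows without bound in this toy, so a real version would need some way to forget old ids.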

What I mean to say is that you can do an awful lot by linking together NEUROs and plugins and hybrids of the two in creative ways. You can create entire networks that are encapsulated in a single plugin; ones that can do very powerful things using very simple code. Hopefully we will see some of this when NullpoMino 8 hits the shelves and user content can come alive.

At some point I'm going to start documenting the plugins we are writing, but right now that would be boring because all we have is the game itself and a not-nearly-complete nullterm. Look out for this sort of thing in the future.
